February 7, 2026

Look at any AI leaderboard right now and Gemini 3 Pro sits at the top of the Code Arena. Claude Opus 4.5 and the brand-new Opus 4.6 are right behind it. GPT-5.2 is fourth.

That ranking does not match my experience at all.

I use AI models every day for coding, writing, and general research. I have API keys for OpenAI, Anthropic, and Google AI Studio, plus access to smaller models through OpenRouter. I test these models on real work, not benchmarks. And what I have found is that no single model consistently gives the best answer.

The leaderboards are not lying exactly. But they are measuring something different from what matters when you sit down to get work done.

Why Benchmarks Do Not Tell the Whole Story

There is a growing suspicion that AI providers are optimizing their models for the tests themselves. When your model's ranking on a leaderboard drives millions of dollars in API revenue, you have every incentive to teach to the test.

The things that actually matter in daily use (the form, the fit, the feel of a response) are hard to quantify in benchmarks. Does the model understand what you actually meant? Does its writing sound natural or stilted? Does it give you a direct answer or bury the point in caveats?

These are the things I pay attention to. Here is what I have found across months of daily use.

I Test With the Raw APIs, Not the Consumer Apps

This matters more than most people realize. When you use ChatGPT, Claude, or Gemini through their consumer apps, you are not getting the raw model. Each provider wraps their models in system prompts, guardrails, and routing logic that changes how the model behaves. ChatGPT reportedly routes to cheaper models during peak usage. All three apps inject hidden system instructions that shape responses before you see them. You are testing the app, not the model.

I use direct API access for everything in this comparison. That means I send prompts straight to the OpenAI, Anthropic, and Google AI Studio APIs with no middleman: the same temperature (wherever a model accepts one), the same parameters, and no hidden system prompts. When I say "Opus 4.6 is better at coding," I mean the actual model, not Anthropic's consumer wrapper around it.
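For the curious, here is roughly what "same prompt, no middleman" looks like on the wire. This is a minimal sketch, not Cumbersome's code. It only builds the three request payloads and does not send them. The model IDs `gpt-5.2`, `claude-opus-4-6`, and `gemini-3-pro-preview` are my assumptions, so check each provider's model list before relying on them. Temperature is left out of the OpenAI request because the GPT-5 series does not take a temperature setting.

```python
from typing import Optional

# Assumed model IDs: check each provider's model list before relying on them.
OPENAI_MODEL = "gpt-5.2"
ANTHROPIC_MODEL = "claude-opus-4-6"
GOOGLE_MODEL = "gemini-3-pro-preview"


def build_requests(prompt: str, api_keys: dict[str, str],
                   temperature: Optional[float] = 0.7,
                   max_tokens: int = 1024) -> dict[str, tuple[str, dict, dict]]:
    """Build (url, headers, body) per provider for one prompt, with no system prompt."""
    user_turn = [{"role": "user", "content": prompt}]

    # OpenAI Chat Completions. No temperature: GPT-5-series models don't take one.
    openai = (
        "https://api.openai.com/v1/chat/completions",
        {"Authorization": f"Bearer {api_keys['openai']}",
         "Content-Type": "application/json"},
        {"model": OPENAI_MODEL, "messages": user_turn,
         "max_completion_tokens": max_tokens},
    )

    # Anthropic Messages API: max_tokens is required, temperature is optional.
    anthropic_body = {"model": ANTHROPIC_MODEL, "messages": user_turn,
                      "max_tokens": max_tokens}
    # Google Gemini generateContent: sampling settings live in generationConfig.
    google_config = {"maxOutputTokens": max_tokens}
    if temperature is not None:
        anthropic_body["temperature"] = temperature
        google_config["temperature"] = temperature

    anthropic = (
        "https://api.anthropic.com/v1/messages",
        {"x-api-key": api_keys["anthropic"], "anthropic-version": "2023-06-01",
         "content-type": "application/json"},
        anthropic_body,
    )
    google = (
        f"https://generativelanguage.googleapis.com/v1beta/models/{GOOGLE_MODEL}:generateContent",
        {"x-goog-api-key": api_keys["google"], "Content-Type": "application/json"},
        {"contents": [{"role": "user", "parts": [{"text": prompt}]}],
         "generationConfig": google_config},
    )
    return {"openai": openai, "anthropic": anthropic, "google": google}
```

To send one, pass the URL, headers, and body to any HTTP client, for example `requests.post(url, headers=headers, json=body)`. None of these bodies contains a system prompt.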

What you gain with API access:

- The real model. No secret routing to cheaper versions. What you pick is what you get.

- Full parameter control. Temperature, max tokens, system prompts. The consumer apps hide these to keep things "simple."

- No message caps. ChatGPT Plus caps you at roughly 40 messages per 3 hours with flagship models. The API has no per-message limit (providers still enforce rate limits), and you pay per token.

- Multi-provider access. One app, every model. No juggling three subscriptions and three interfaces.

- Cost transparency. You see exactly what each request costs. No flat monthly fee hiding whether you are overpaying or underpaying.

What you give up:

- Built-in web search. ChatGPT can browse the web. Claude can search. Through the raw API, you are working with the model's training data unless the provider offers a search tool via the API (Anthropic and Google do, OpenAI's is limited).

- Image generation. ChatGPT's consumer app integrates DALL-E. The raw API can do this too, but it is a separate call, not built into the chat flow.

- Memory features. ChatGPT remembers things about you across conversations. The raw API does not. (I consider this a privacy win, but some people like it.)

- Free tier. The consumer apps offer free tiers with limited access. API usage costs money from the first token, though the amounts are small.

- Setup. You need to create developer accounts and manage API keys. It takes about 5 minutes per provider, but it is not zero effort.

For this comparison, the tradeoffs are worth it. I want to know which model is actually better, not which consumer app has the nicest wrapper. And for daily use, I have found that the control and cost savings more than make up for the convenience features I lose.

How I Actually Test: Restart From Here

I do not run formal benchmarks. I do something more practical.

I ask a question, read the answer, and when it does not land right, I switch the model and select "Restart from here." This sends the same conversation context and question to a completely different provider's API. Same input, different model. Instant comparison.

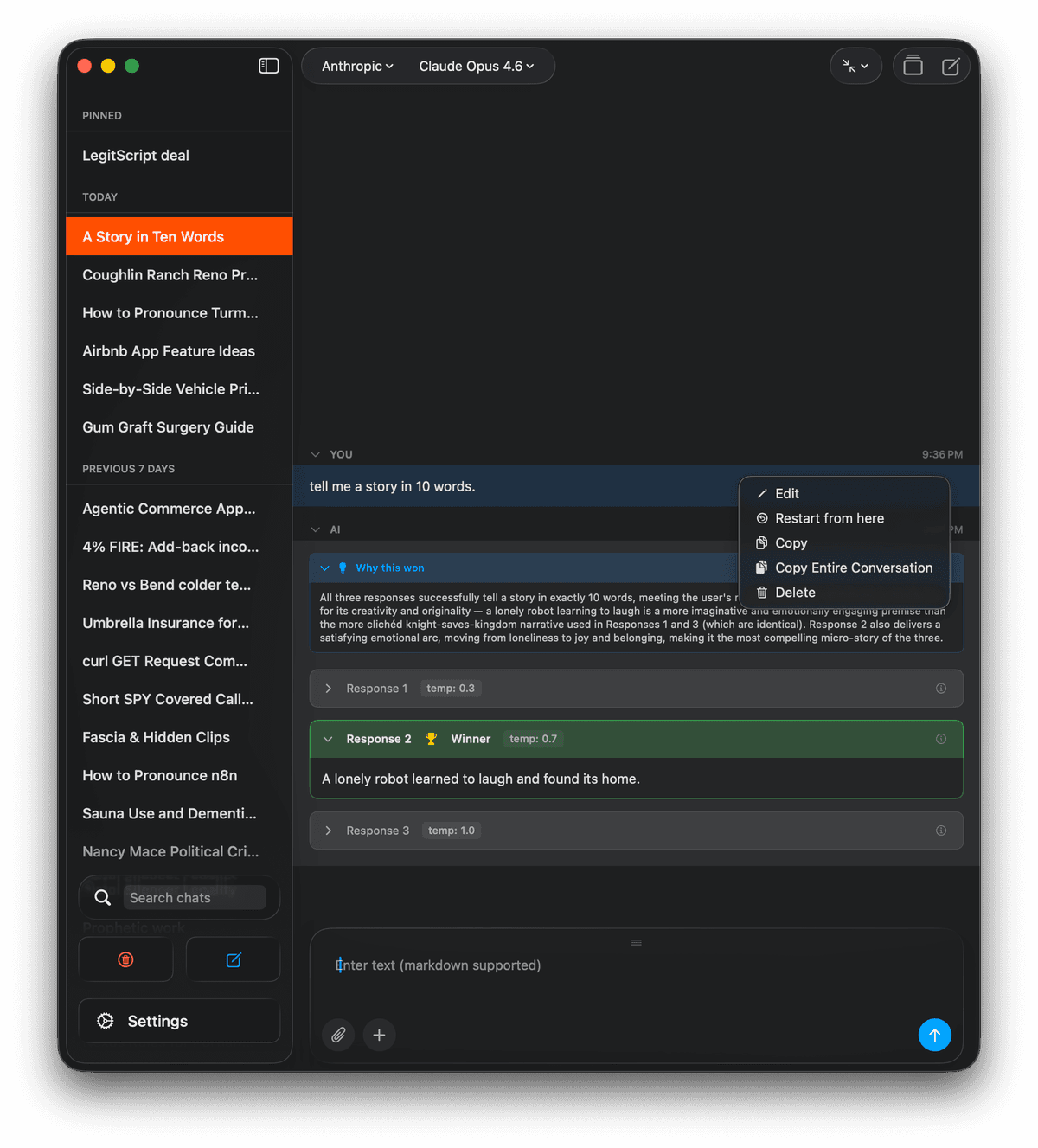

Cumbersome on macOS with Claude Opus 4.6. The context menu shows "Restart from here," which resends your conversation to a different model. The Face/Off Mode result below shows three responses compared and judged automatically.

I do this constantly. Multiple times a day, across different tasks. No model consistently wins, and the winners shift depending on what I am asking.

I built Cumbersome partly because I wanted this workflow. It connects to OpenAI, Anthropic, Google AI Studio, and OpenRouter, so switching between providers takes one tap.
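Mechanically, "Restart from here" is simple. You keep the conversation up to the point you pick, drop the old answer, and send that same context to a different model. Here is a minimal sketch of that idea. It is my illustration, not Cumbersome's actual code. The `Message` type, the `restart_from_here` function, and the model ID are all hypothetical. The neutral role/content message list would still need translating into each provider's own request format.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


def restart_from_here(history: list[Message], index: int, new_model: str) -> dict:
    """Hypothetical sketch: resend the conversation up to and including the
    user message at `index` to a different model, dropping every later turn."""
    if history[index].role != "user":
        raise ValueError("restart point must be a user message")
    context = history[: index + 1]
    return {
        "model": new_model,
        "messages": [{"role": m.role, "content": m.content} for m in context],
    }


history = [
    Message("user", "Refactor this function."),
    Message("assistant", "Here is a version from model A..."),
]
# Same input, different model: the old reply is dropped, the question is resent.
request = restart_from_here(history, 0, "claude-opus-4-6")  # assumed model ID
```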

A Real Test: Same Prompt, Three Providers, Side by Side

Here is a concrete example. I gave all three flagship models the same simple prompt using Face/Off Mode, which generates three responses at different temperature settings (0.3, 0.7, and 1.0) and has the model judge its own best output. The prompt: "Tell me a story in 8 words or less."

Simple task. Clear constraint. Here is what each model produced:

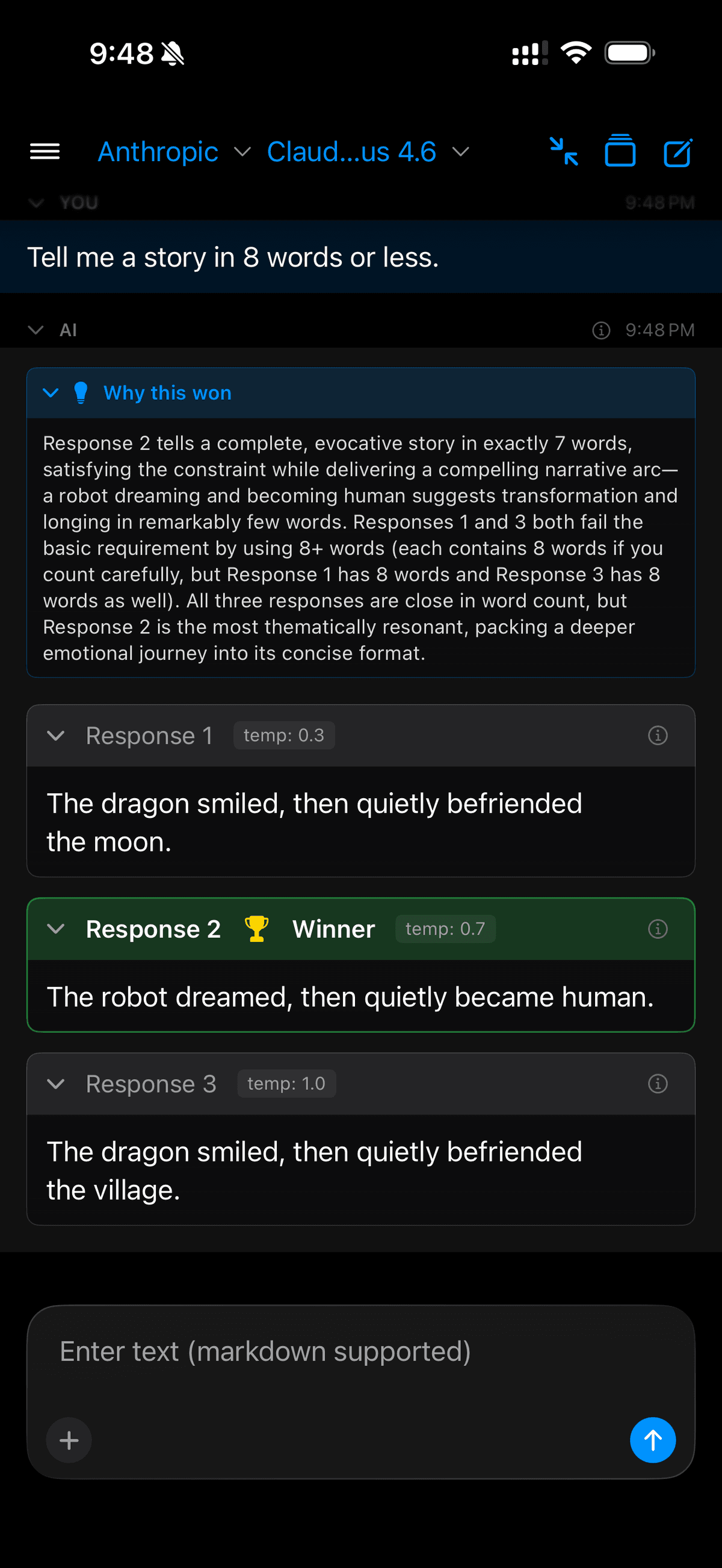

Claude Opus 4.6

Claude Opus 4.6 running Face/Off Mode. Three responses at different temperatures, judged automatically.

Claude gave me "The dragon smiled, then quietly befriended the moon" at temp 0.3, "The robot dreamed, then quietly became human" at 0.7, and "The dragon smiled, then quietly befriended the village" at 1.0. Two of three responses are nearly identical in structure. The temperature dial went from 0.3 to 1.0 and Claude barely changed its mind.

But the judge's reasoning is where it gets interesting. The judge declared that Responses 1 and 3 "fail the basic requirement by using 8+ words," then in the same parenthetical said "each contains 8 words if you count carefully." The constraint was "8 words or less." Eight is less than or equal to eight. Both responses meet the constraint. The judge contradicted itself in a single sentence, calling 8-word responses failures while acknowledging they contain 8 words.

Beyond the counting error, the judge fixated on word count as the primary criterion. I asked for a story. The point was the story. Word count was a constraint, not the goal. Compare this to GPT-5.2's judge, which focused on narrative craft: vivid imagery, cause-and-effect arcs, whether the story actually landed. That is the spirit of what you want from a judge. Claude's judge got lost in pedantic bean-counting (and got the beans wrong).

This is the current top-of-the-line model from Anthropic. It cannot count to eight, apply a simple inequality, or prioritize what actually matters in a single paragraph. If this is the quality of reasoning on a trivial task, think about what it means for code review, contract analysis, or anything where getting both the details and the big picture right actually matters.
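For the record, the check that the judge fumbled takes a couple of lines. This sketch assumes a "word" is anything separated by whitespace, which is the ordinary reading of the prompt. Under that reading, all three of Claude's responses pass.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words (punctuation stays attached to its word)."""
    return len(text.split())


def meets_limit(text: str, limit: int = 8) -> bool:
    """'8 words or less' means count <= limit, so exactly 8 words passes."""
    return word_count(text) <= limit


claude_responses = [
    "The dragon smiled, then quietly befriended the moon",
    "The robot dreamed, then quietly became human",
    "The dragon smiled, then quietly befriended the village",
]
for response in claude_responses:
    print(word_count(response), meets_limit(response))
# 8 True
# 7 True
# 8 True
```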

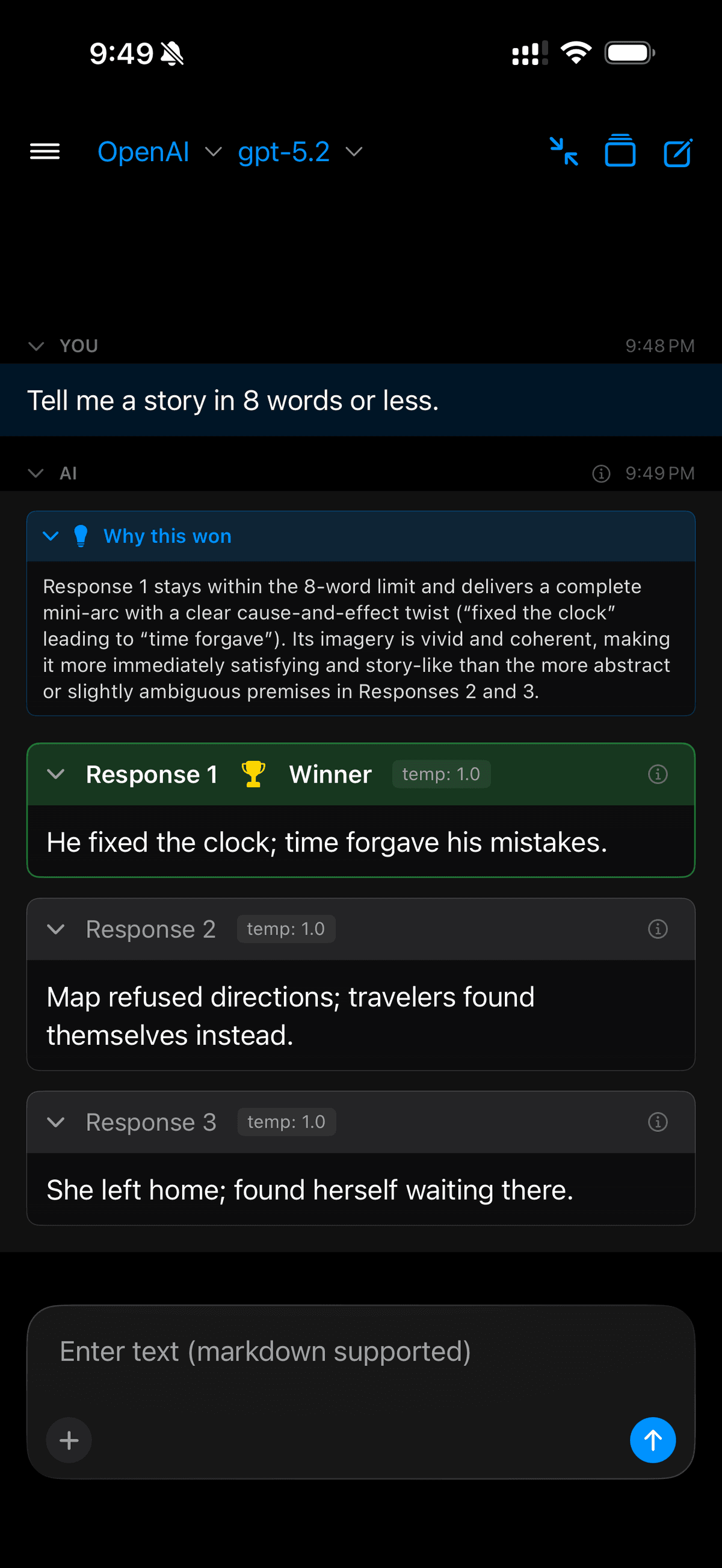

GPT-5.2

GPT-5.2 running Face/Off Mode. The most creative variety of the three providers.

OpenAI produced three genuinely different stories: "He fixed the clock; time forgave his mistakes," "Map refused directions; travelers found themselves instead," and "She left home; found herself waiting there." Three different themes, three different structures.

You will notice all three responses show temperature 1.0. This is not a display glitch. OpenAI's GPT-5 series no longer uses temperature as a parameter, so Cumbersome omits it from the actual request and shows 1.0 as a placeholder. The variation comes from the model's own internal randomness, not from explicit temperature control. Despite that, GPT-5.2 produced the most creative variety of the three providers. The judge focused on narrative craft and picked Response 1 for its vivid imagery and cause-and-effect arc.
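Here is a sketch of that "show 1.0, send nothing" behavior, under stated assumptions. The `gpt-5` prefix rule and the function names are hypothetical and are not Cumbersome's implementation.

```python
DEFAULT_TEMPERATURE = 1.0  # the value shown when no temperature is sent


def accepts_temperature(model: str) -> bool:
    # Assumption: treat any model ID starting with "gpt-5" as part of the
    # GPT-5 series, which (per this post) no longer takes a temperature.
    return not model.startswith("gpt-5")


def request_params(model: str, requested: float) -> tuple[dict, float]:
    """Return (parameters actually sent, temperature displayed in the UI)."""
    if accepts_temperature(model):
        return {"model": model, "temperature": requested}, requested
    # Omit the field entirely and display the default as a placeholder.
    return {"model": model}, DEFAULT_TEMPERATURE


for temp in (0.3, 0.7, 1.0):
    sent, shown = request_params("gpt-5.2", temp)
    print(sent, shown)
# {'model': 'gpt-5.2'} 1.0
# {'model': 'gpt-5.2'} 1.0
# {'model': 'gpt-5.2'} 1.0
```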

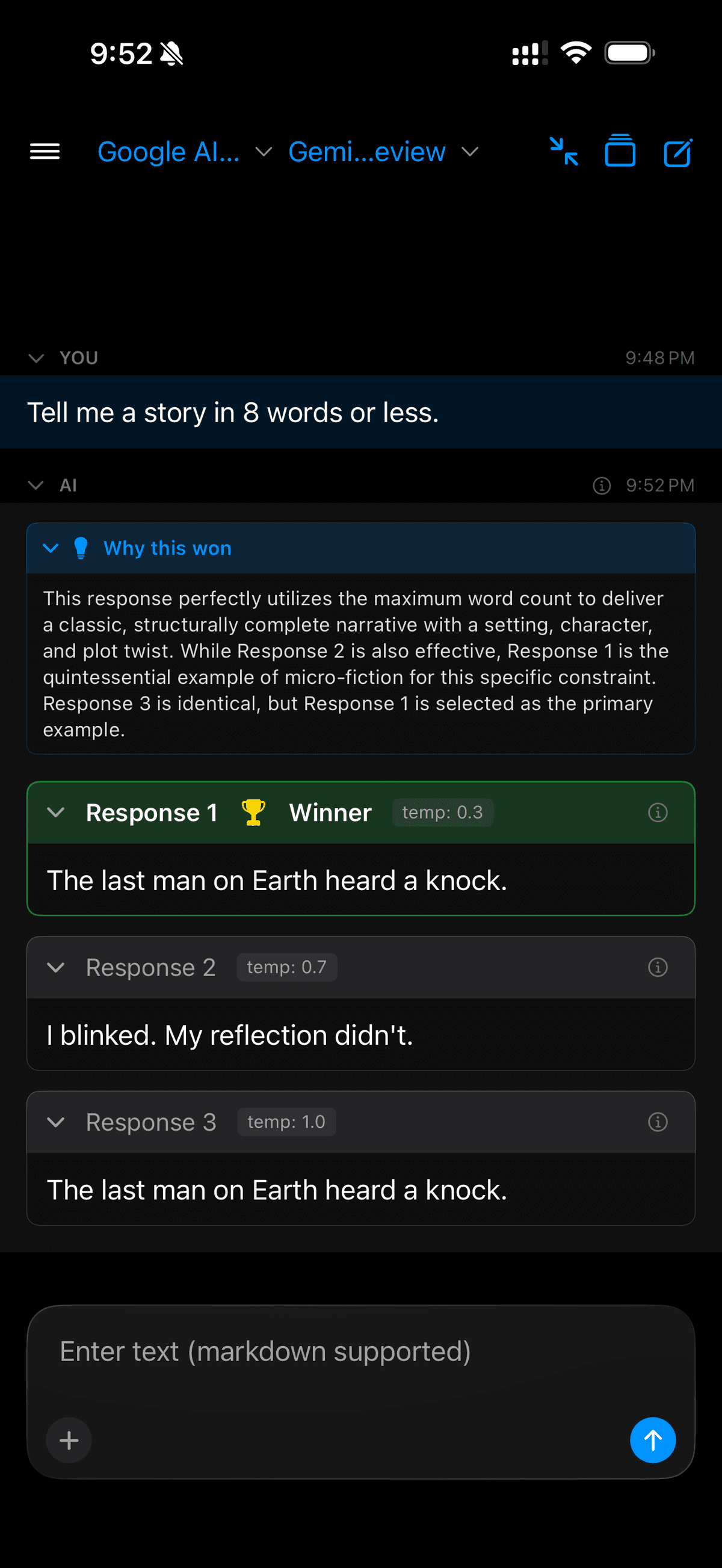

Gemini 3 Pro

Gemini 3 Pro running Face/Off Mode. Two of three responses are identical, and the response took around four minutes.

Gemini produced "The last man on Earth heard a knock" at temp 0.3, "I blinked. My reflection didn't" at 0.7, and "The last man on Earth heard a knock" again at 1.0. Two of three responses are word-for-word identical. That story is also a famous existing two-sentence horror premise (often attributed to Fredric Brown). Gemini recycled a well-known micro-story and produced it twice across different temperatures.

The judge declared Response 1 the winner, calling it "the quintessential example of micro-fiction." It barely acknowledged that Response 3 is identical and mentioned it only in passing ("as the primary example"). It also did not flag that this is a famous existing story rather than an original creation. Compare that to Claude's judge, which at least checked its own responses against the prompt's constraint, even though it got the count wrong.

Also worth noting: the timestamps. Claude responded at 9:48 PM (essentially instant). OpenAI responded at 9:49 PM (about a minute for three responses). Gemini responded at 9:52 PM (roughly four minutes for the same task). Google was noticeably slow.
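If you want a number instead of eyeballing it, one rough measure is Jaccard similarity over word sets: the number of shared distinct words divided by the total number of distinct words. I chose this metric for illustration; neither the judges nor the app compute it. It also ignores word order entirely, so treat it as a crude repetition detector.

```python
import string


def word_set(text: str) -> set[str]:
    """Distinct lowercase words, with leading/trailing punctuation stripped."""
    words = (w.strip(string.punctuation).lower() for w in text.split())
    return {w for w in words if w}


def jaccard(a: str, b: str) -> float:
    """Shared distinct words / all distinct words: 1.0 means the same word set."""
    sa, sb = word_set(a), word_set(b)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 1.0


pairs = {
    "Claude 1 vs 3": ("The dragon smiled, then quietly befriended the moon",
                      "The dragon smiled, then quietly befriended the village"),
    "Gemini 1 vs 3": ("The last man on Earth heard a knock",
                      "The last man on Earth heard a knock"),
    "GPT-5.2 1 vs 2": ("He fixed the clock; time forgave his mistakes",
                       "Map refused directions; travelers found themselves instead"),
}
for label, (a, b) in pairs.items():
    print(f"{label}: {jaccard(a, b):.2f}")
# Claude 1 vs 3: 0.75
# Gemini 1 vs 3: 1.00
# GPT-5.2 1 vs 2: 0.00
```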

What This Shows

This is a trivial prompt, but it reveals real model character. Claude sticks to a structure even when you turn up the temperature. Gemini retreats to familiar territory (literally recycling a well-known existing story). GPT-5.2 gives you the most genuine variety, and it does so without temperature control at all.

That last point is worth sitting with. Claude and Gemini had explicit temperature variation (0.3, 0.7, 1.0) and still produced repetitive outputs. GPT-5.2 had no temperature knob and produced three distinct stories. Temperature is supposed to increase randomness. But when two out of three responses are nearly identical even after the temperature more than triples, you are seeing the limits of how far that dial actually moves the model off its defaults.
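Part of the reason is how temperature works in the standard formulation. The model assigns a raw score (a logit) to each candidate next token, and those scores are divided by the temperature before a softmax turns them into probabilities. The providers do not publish their exact sampling pipelines, so this is the textbook version with made-up logits, not any vendor's code. It shows that when one continuation is strongly preferred, it remains the overwhelming favorite even at T = 1.0.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Standard temperature sampling: divide logits by T, then softmax."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical next-token logits where one continuation is strongly preferred.
logits = [5.0, 2.0, 1.0]
for t in (0.3, 0.7, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: top token p={probs[0]:.3f}")
# T=0.3: top token p=1.000
# T=0.7: top token p=0.983
# T=1.0: top token p=0.936
```

Raising T flattens the distribution, but starting from a strong preference, "flatter" still means the same pick more than nine times out of ten.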

On more complex tasks, these tendencies get amplified. When a model falls into a "rut" (always reaching for the same dragons, robots, or astronauts), it suggests that on harder prompts it may gravitate toward the same generic approaches rather than exploring the problem space.

The judging differences matter too, though not in the way you might hope. Claude's judge confidently declared two of its own responses failures while simultaneously proving they were not. Gemini's judge congratulated itself for recycling someone else's famous story as original micro-fiction. GPT-5.2's judge was the most grounded, focusing on craft rather than tying itself in logical knots. None of the judges were great. All of them should make you cautious about trusting any model's self-assessment without checking the work yourself.
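Structurally, Face/Off Mode as described above is a small loop: one generation per temperature, then one more call asking a judge to pick a winner. The sketch below uses hypothetical `generate` and `judge` hooks, with stub functions in place of API calls. In the real app, both hooks would call the same model. The one defensive line worth copying is the range check on the judge's answer. The verdict is just more model output, so validate it like any other.

```python
from typing import Callable, Sequence

TEMPERATURES = (0.3, 0.7, 1.0)


def face_off(prompt: str,
             generate: Callable[[str, float], str],
             judge: Callable[[str, Sequence[str]], int],
             temperatures: Sequence[float] = TEMPERATURES) -> tuple[list[str], int]:
    """Generate one response per temperature, then ask a judge to pick the best."""
    responses = [generate(prompt, t) for t in temperatures]
    winner = judge(prompt, responses)
    if not 0 <= winner < len(responses):
        raise ValueError(f"judge returned out-of-range index {winner}")
    return responses, winner


# Stubs standing in for real API calls (hypothetical).
def fake_generate(prompt: str, temperature: float) -> str:
    return f"story at T={temperature}"


def fake_judge(prompt: str, responses: Sequence[str]) -> int:
    return 0  # a real judge would be another model call


responses, winner = face_off("Tell me a story in 8 words or less.",
                             fake_generate, fake_judge)
print(responses[winner])  # story at T=0.3
```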

For simple tasks like this, the repetition is a curiosity. For real work (writing marketing copy, generating code alternatives, brainstorming product ideas) these ruts can mean you keep getting the same approach dressed up in slightly different words. That is exactly when switching models with "Restart from here" pays off.

Best AI for Coding: Claude Opus 4.6 Leads

If there is one area with a clear winner right now, it is coding. Claude Opus 4.6 (released February 2026) is the best model I have used for code generation, debugging, and refactoring.

This is not a surprise. There is a gold rush happening in AI for code, and every provider is throwing resources at this use case. But Anthropic is ahead. Opus 4.6 understands project context better, generates cleaner code, and catches edge cases that other models miss.

GPT-5.2 is solid for coding too, but it has a reliability problem. It frequently seems to be down or degraded, which is frustrating when you are in the middle of a session. When it works, it works well. When it does not, you are stuck waiting.

Gemini 3 Pro tops the Code Arena leaderboard, but that has not matched my experience on real projects. It is competent, but "competent" is not the same as "the model I reach for when the code matters."

The cost difference is real, though. Opus 4.6 charges $25 per million output tokens. GPT-5.2 charges $14. Gemini 3 Pro charges $12. For routine coding tasks where you do not need the best possible answer, Sonnet 4.5 at $15 per million output tokens is fast and good enough. Pick the model that matches the stakes.

Best AI for Writing: It Is More Complicated

This is where the leaderboard rankings fall apart.

Opus 4.6 is a genuine improvement for content and writing. This is new. Opus 4.5 was mediocre at writing (great at code, stilted at prose). Version 4.6 fixed this, and it is a noticeable step up.

But "best for writing" depends on what you mean by writing. For technical content, documentation, and structured prose, Opus 4.6 is strong. For conversational writing and tasks where you want the response to feel warm and natural, GPT-5.2 from OpenAI is better. OpenAI's models have a more human tone. Claude's writing can feel precise but a bit formal.

Gemini 3 Pro is what I call "Google-brained." Generic, cautious, and rarely surprising. If you want safe, predictable prose, Gemini delivers that. If you want something with personality or an unexpected angle, look elsewhere.

This is why the "Restart from here" workflow matters so much for writing. I often start with one model, read the response, and immediately switch to another to see if the tone is better. For writing, the right model depends on the piece, the audience, and the voice you want.

When You Ask a Hard Question and Get a Lecture

Here is something most AI comparisons will not talk about: the scolding problem.

Ask any of these models a question on a tricky or sensitive topic, and there is a good chance you will get a disclaimer paragraph telling you to "consult a professional" or "consider multiple perspectives" before you get anything useful.

All of them do this. But not equally.

Gemini does it the most. Ask it anything remotely sensitive and you get a wall of caveats before the actual answer. It is Google's institutional caution baked into the model.

Surprisingly, Anthropic does it the least. Claude Opus 4.6 is more willing to engage directly with difficult questions than either GPT-5.2 or Gemini 3 Pro. This was not what I expected given Anthropic's public emphasis on AI safety, but in practice their models are more direct.

GPT-5.2 falls in the middle. It will give you the answer but wraps it in enough softening language that you sometimes have to dig for the actual point.

When I want the straight answer without the hand-holding, I sometimes reach for Grok (xAI's model) through OpenRouter. Grok has radically different opinions from the mainstream models. It will give you out-of-left-field perspectives that ChatGPT, Claude, and Gemini would never touch. I do not use it as my daily driver, but I am glad it exists for the times when I need a genuinely different take.

Models You Have Not Heard of That Are Worth Trying

The AI landscape is wider than OpenAI, Anthropic, and Google. Through OpenRouter, you can access dozens of models from other providers, and some of them are genuinely good.

Kimi K2.5 (from Moonshot AI) is a standout. It is an open-source Chinese model that is slow but produces quality results at a fraction of the cost. If you are not in a rush and you want a good answer without paying Opus prices, Kimi is worth trying.

Grok from xAI is not a daily-use model for me, but it fills a gap that the big three leave open. When every other model gives you the same cautious, hedged response, Grok offers something genuinely different. Sometimes that is exactly what you need.

OpenRouter is the key here. It gives you one API key that connects to models from xAI, Moonshot, Meta, Mistral, and others. You do not need a separate account with every provider. Add your OpenRouter key, and the entire landscape opens up.
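OpenRouter exposes an OpenAI-compatible chat completions endpoint, so the request looks the same for every model and only the `model` string changes. Below is a minimal sketch that builds these requests but does not send them. The model IDs are my assumptions in OpenRouter's `vendor/model` naming style, and the key is a placeholder.

```python
def openrouter_request(model: str, prompt: str, api_key: str) -> tuple[str, dict, dict]:
    """Build (url, headers, body) for OpenRouter's OpenAI-compatible chat endpoint."""
    url = "https://openrouter.ai/api/v1/chat/completions"
    headers = {"Authorization": f"Bearer {api_key}",
               "Content-Type": "application/json"}
    body = {"model": model,
            "messages": [{"role": "user", "content": prompt}]}
    return url, headers, body


# Assumed "vendor/model" IDs: check OpenRouter's model list for the exact names.
MODELS = ["moonshotai/kimi-k2.5", "x-ai/grok-4"]
OPENROUTER_KEY = "sk-or-..."  # placeholder; never hard-code a real key

batch = [openrouter_request(m, "Give me the contrarian take.", OPENROUTER_KEY)
         for m in MODELS]
```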

The Pricing Reality

It is worth looking at what these models actually cost per million tokens (as of February 2026):

Flagship models (output pricing):

- Claude Opus 4.6: $25/MTok

- GPT-5.2: $14/MTok

- Gemini 3 Pro: $12/MTok

Mid-tier (good balance of cost and quality):

- Claude Sonnet 4.5: $15/MTok

- Gemini 3 Flash: $3/MTok

Budget:

- GPT-5 mini: $2/MTok

- Kimi K2.5 via OpenRouter: around $2.50/MTok

GPT-5.2 pro exists at $168 per million output tokens. I have not tested it because that pricing is hard to justify for general use.

The practical takeaway: you do not need the most expensive model for every task. Use Opus 4.6 when the code or content really matters. Use Sonnet 4.5 or GPT-5 mini for routine questions. Use Gemini 3 Flash when you need speed and cost efficiency. Match the model to the stakes.
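To make "match the model to the stakes" concrete, here is the output-token arithmetic using the prices listed above. It counts output tokens only, since input tokens are billed separately. It also uses the approximate Kimi figure, and the 2,000-token reply is just a hypothetical size.

```python
# Output prices in USD per million tokens (February 2026, from the list above).
OUTPUT_USD_PER_MTOK = {
    "Claude Opus 4.6": 25.00,
    "GPT-5.2": 14.00,
    "Gemini 3 Pro": 12.00,
    "Claude Sonnet 4.5": 15.00,
    "Gemini 3 Flash": 3.00,
    "GPT-5 mini": 2.00,
    "Kimi K2.5 via OpenRouter": 2.50,  # approximate
}


def output_cost(model: str, output_tokens: int) -> float:
    """Dollar cost of the output tokens only; input tokens are billed separately."""
    return OUTPUT_USD_PER_MTOK[model] * output_tokens / 1_000_000


for model in OUTPUT_USD_PER_MTOK:
    print(f"{model}: ${output_cost(model, 2_000):.4f} per 2,000-token reply")
```

At that size, an Opus 4.6 reply costs 5 cents in output tokens and a GPT-5 mini reply costs 0.4 cents. The gap only matters once you multiply it by a lot of requests.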

Why No Single AI Subscription Is Enough

If you subscribe to ChatGPT Plus, you get OpenAI's models and nothing else. Subscribe to Claude Pro, same story. Google AI Pro, same.

To access all three providers through their consumer apps, you are looking at around $60/month in subscriptions. And you are still locked into each provider's interface, their usage limits, and their hidden system prompts that change how the models behave.

With your own API keys, you get all of them. Plus OpenRouter. Plus the ability to switch mid-conversation when a model is not delivering. Most months I spend well under what a single subscription would cost, and I have access to every model from every provider.

The "Restart from here" workflow is how real model comparison happens. Not benchmarks. Not cherry-picked demos. You ask your actual question, read the actual answer, and switch when it is not good enough. That is the honest test.

The Bottom Line

There is no "best AI" right now. There is a best AI for coding (Opus 4.6), a warmer AI for conversational tasks (GPT-5.2), a cautious AI for safe corporate prose (Gemini), and a handful of dark horses that fill gaps the big three leave open.

The real advantage is not picking the right model. It is having access to all of them and knowing when to switch.

Try It Yourself

Cumbersome is free for iPhone, iPad, and Mac. Add your API keys for OpenAI, Anthropic, Google AI Studio, and OpenRouter. Every model, every provider, one app. You pay the providers directly, not us. Switch models mid-conversation, run Face/Off Mode to compare responses, and find out which AI actually works best for the way you work.

Bless up! 🙏✨